Introduction

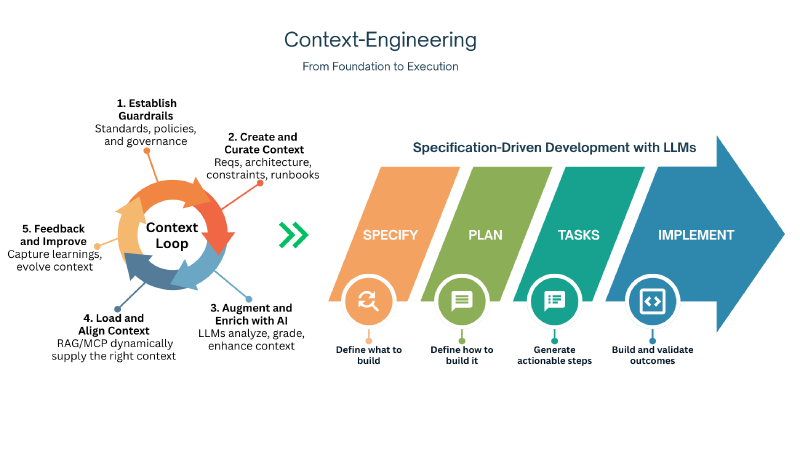

This is where Context Engineering comes in: the discipline of designing, curating, and maintaining structured, high-quality context across the SDLC so AI systems can operate effectively within enterprise boundaries.

1. From Built-In Quality to High-Quality Context

Every artifact we create through the SDLC — from requirements to architecture, from test cases to runbooks — contributes to the context fabric of our organisation. When quality is built in at each step, that fabric becomes strong, consistent, and richly descriptive — the perfect substrate for AI augmentation.

This context includes:

- High-quality requirements — acceptance criteria, use cases, and user journeys

- Architecture artifacts — key business drivers, architecture characteristics, risk analysis, NFRs, fitness functions, and diagrams

- Enterprise constraints — standards, decisions, patterns, tooling, technology, security

- Operational knowledge — runbooks, observability frameworks, and lessons learned

- Consistent engineering practices — standards, quality targets, templates, automation, and metrics

Together, these form a shared system of understanding — the living context that explains not only what we are building, but how and why we build it that way.

Every time we work with an AI system, we must ensure that this context, from enduring architectural principles to the most recent design decisions, is available and relevant. Retrieval-Augmented Generation (RAG) pulls relevant documents into the prompt at query time, and the Model Context Protocol (MCP) gives models a standard way to reach external tools and data sources. Mechanisms like these let us load and refresh this information dynamically, so every interaction starts well-informed and stays aligned as work evolves.
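To make the retrieval idea concrete, here is a minimal sketch of selecting relevant context for a request. It is an illustration under assumptions, not a production RAG pipeline: the `Artifact` structure, the sample artifacts (including the "ADR-7" name), and the word-overlap scoring are all hypothetical, and a real system would typically use embedding similarity instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Artifact:
    """One piece of organisational context (hypothetical structure)."""
    name: str
    kind: str  # e.g. "architecture", "runbook", "standard"
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    cleaned = "".join(ch.lower() if ch.isalnum() else " " for ch in text)
    return set(cleaned.split())


def retrieve(query: str, artifacts: list[Artifact], top_k: int = 2) -> list[Artifact]:
    """Return up to top_k artifacts sharing the most words with the query.

    Word overlap is a toy stand-in for the similarity search a real
    retrieval pipeline would use.
    """
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(a.text)), a) for a in artifacts]
    relevant = [(score, a) for score, a in scored if score > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [a for _, a in relevant[:top_k]]


def build_prompt(query: str, artifacts: list[Artifact]) -> str:
    """Place the retrieved context ahead of the task sent to the model."""
    sections = [f"[{a.kind}] {a.name}\n{a.text}" for a in retrieve(query, artifacts)]
    return "\n\n".join(sections + [f"Task: {query}"])


# Illustrative artifacts only; the names and contents are made up.
CONTEXT = [
    Artifact("ADR-7", "architecture",
             "Services publish events to the message broker, never call each other directly."),
    Artifact("Payments runbook", "runbook",
             "Restart the payments worker if the queue depth exceeds the alert threshold."),
    Artifact("Style guide", "standard",
             "Public functions require type hints and docstrings."),
]

if __name__ == "__main__":
    print(build_prompt("How should the orders service publish events?", CONTEXT))
```

Because the scoring counts every shared word, common words such as "the" can pull in weakly related artifacts. That noise is one reason real pipelines invest in better ranking and in well-structured source material.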

And here’s where it gets interesting: the same LLMs that depend on high-quality context can also help us maintain and improve it.

AI can assist in:

- Reviewing and grading artifacts for completeness or consistency

- Detecting drift or outdated information

- Suggesting missing dependencies or unclear logic

- Generating structured documentation and linking related artifacts automatically

This creates a reinforcing feedback loop — humans produce and refine context, LLMs enhance and maintain it, and together they raise the quality bar over time.
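Some of these checks do not need a model at all. The sketch below shows a deterministic pre-check that could run before or alongside an LLM review: it flags artifacts that have not been reviewed recently and references to artifacts that do not exist. The `ArtifactRecord` fields and the 180-day threshold are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class ArtifactRecord:
    """Metadata for one context artifact (hypothetical fields)."""
    name: str
    last_reviewed: date
    references: list[str] = field(default_factory=list)


def find_issues(records: list[ArtifactRecord], today: date,
                max_age_days: int = 180) -> list[str]:
    """Flag artifacts that look stale or that reference missing artifacts."""
    known = {r.name for r in records}
    cutoff = today - timedelta(days=max_age_days)
    issues = []
    for record in records:
        # Drift check: the artifact has not been reviewed within the window.
        if record.last_reviewed < cutoff:
            issues.append(
                f"{record.name}: not reviewed since {record.last_reviewed.isoformat()}"
            )
        # Dependency check: every referenced artifact should exist.
        for ref in record.references:
            if ref not in known:
                issues.append(f"{record.name}: references unknown artifact '{ref}'")
    return issues
```

A report like this gives an LLM reviewer a narrower, better-grounded starting point than asking it to judge the whole corpus unaided.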

2. Why Context Matters: LLMs Are New Hires

Every time you start a new session with a large language model, you’re effectively onboarding a brand-new engineer.

The model understands coding — but it has no idea how your business operates, what your architectural patterns look like, how your teams collaborate, or what “good” means in your organisation.

We don’t have the luxury of letting an LLM shadow a senior engineer for months, observe pull requests, attend design reviews, or absorb the organisation’s nuances organically. Instead, we must deliver that understanding through context — structured, high-quality, and relevant every time.

That’s why Context Engineering is essential. Each new interaction with an AI agent requires us to re-establish a shared understanding of standards, goals, and constraints. The richer and more structured the context, the faster the “onboarding” — and the better the outcomes.

The side benefit? Humans thrive on this same structure. Well-formed context makes engineers more productive, decisions more traceable, and knowledge more durable.

3. From Context to Code: Specification-Driven Development

Once we’ve built up high-quality context, we can start turning that context into action through Specification-Driven Development (SDD) — a workflow that transforms structured context into executable specifications for coding agents.

Using tools like Spec Kit, we can orchestrate this process step by step:

- Constitution – Load foundational context, standards, and objectives into the workflow to ground subsequent steps.

- High-level specifications – Define what to build (requirements, user stories, and goals).

- Clarifications – Resolve ambiguity and fill gaps before technical planning.

- Technical plans – Create detailed implementation plans aligned to the chosen tech stack.

- Tasks – Generate actionable implementation tasks.

- Artifact analysis – Check consistency and coverage across generated artifacts.

- Implementation – Have the coding agent execute the tasks to build the feature according to plan.

Each of these artifacts is stored within the repository — versioned, reviewable, and auditable — giving both humans and coding agents shared visibility and traceability. Furthermore, these artifacts can be refined and tuned by humans and agents at each step in the SDD process.
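One way to picture the workflow is as an ordered pipeline in which each stage builds on the one before it. The sketch below is not Spec Kit's implementation. The stage names are shorthand for the steps listed above, and the strict linear ordering is a simplifying assumption, since in practice teams revisit earlier stages.

```python
from typing import Optional

# Shorthand names for the workflow steps, in order (illustrative only).
STAGES = [
    "constitution",
    "specification",
    "clarifications",
    "plan",
    "tasks",
    "analysis",
    "implementation",
]


def next_stage(completed: set[str]) -> Optional[str]:
    """Return the first stage not yet completed, or None when all are done.

    Raises ValueError if a later stage is marked complete while an earlier
    one is missing, or if an unrecognised stage name is supplied.
    """
    unknown = completed - set(STAGES)
    if unknown:
        raise ValueError(f"unknown stages: {sorted(unknown)}")
    pending = None
    for stage in STAGES:
        if stage not in completed:
            if pending is None:
                pending = stage  # remember the earliest gap
        elif pending is not None:
            raise ValueError(f"'{stage}' completed before '{pending}'")
    return pending
```

A guard like this could sit in a pre-commit hook or CI job so that, for example, implementation work is not started from a plan that skipped clarification.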

By curating these executable specifications, we create a clear, structured foundation that allows multiple coding agents to work in parallel. Agents can implement different tasks marked “parallel” within a single specification, or work on separate specifications within the same codebase. This parallelisation becomes practical and safe when the codebase is modular, well-structured, and covered by robust tests.
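The sketch below shows one way tasks marked as parallel could be grouped into batches that are safe to hand to separate agents. The `Task` structure and the rule that tasks in a batch must not touch the same files are assumptions made for illustration, not a description of how any particular tool schedules work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """One implementation task (hypothetical structure)."""
    task_id: str
    files: frozenset[str]   # files the task is expected to modify
    parallel: bool = False  # whether the task was marked as parallel-safe


def plan_batches(tasks: list[Task]) -> list[list[str]]:
    """Group tasks into ordered batches; tasks in one batch may run concurrently.

    A task joins the current batch only if it and every task already in the
    batch are marked parallel and it shares no files with them. Otherwise it
    starts a new batch, so the original task order is preserved.
    """
    batches: list[list[Task]] = []
    for task in tasks:
        current = batches[-1] if batches else None
        can_join = (
            current is not None
            and task.parallel
            and all(t.parallel for t in current)
            and all(task.files.isdisjoint(t.files) for t in current)
        )
        if can_join:
            current.append(task)
        else:
            batches.append([task])
    return [[t.task_id for t in batch] for batch in batches]
```

The file-overlap rule is where modularity pays off: the more cleanly a codebase separates concerns, the more tasks land in the same batch.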

Where systems are not yet ready for this level of parallelised automation, SDD provides a path forward. We can use the same specification-driven workflow to incrementally refactor and improve codebases — step by step — until they reach the maturity required for safe, concurrent AI collaboration.

In this way, SDD is both a delivery framework and a modernisation engine. It helps us scale the work of development safely across human and AI contributors while continuously improving the technical foundation underneath.

This approach eliminates brittle “long prompt” interactions and replaces them with a repeatable, inspectable workflow, where each stage can be reviewed, refined, and reused.

4. The New Skill: Context Engineering

The emerging skill in AI-augmented delivery isn’t prompting — it’s context engineering.

Prompting is transient; context endures. Prompting says “do this”; context says “here’s how we do things here.”

In this new paradigm, the role of the engineer evolves from coder to context architect — someone who builds and maintains the structured environment that allows AI agents to work effectively and safely within enterprise constraints.

And while this helps AI coding agents perform better, it also strengthens human teams — creating a shared foundation of clarity, standards, and alignment across the delivery ecosystem.

Conclusion

Every engineer — human or AI — performs best when given clarity, context, and structure.

By combining built-in quality with Context Engineering and Specification-Driven Development, we create an environment where AI coding agents and human engineers work together — safely, efficiently, and at scale.

We can’t teach LLMs our culture through osmosis — we must encode it through context. That’s how we transform modernisation from an experiment into an engineered system of continuous understanding.